Introduction

The growing prevalence of mental health disorders such as depression, anxiety, and stress calls for innovative, real-time approaches to detection and intervention. Traditional assessment methods rely on sporadic self-reports and clinical interviews. As a result, they are limited by subjectivity and infrequent data sampling. To address these constraints, this research proposes a comprehensive digital phenotyping framework that draws on multimodal data sources: smartphone sensor data, web activity, voice signals, and facial expressions. By continuously monitoring these data streams, the system aims to capture subtle affective cues and emotional transitions that conventional diagnostic tools often miss. Paralinguistic vocal features (e.g., pitch, intensity, and speaking rate) and facial micro-expressions are integrated to improve the accuracy of emotional state detection and to support finer-grained mood analysis.

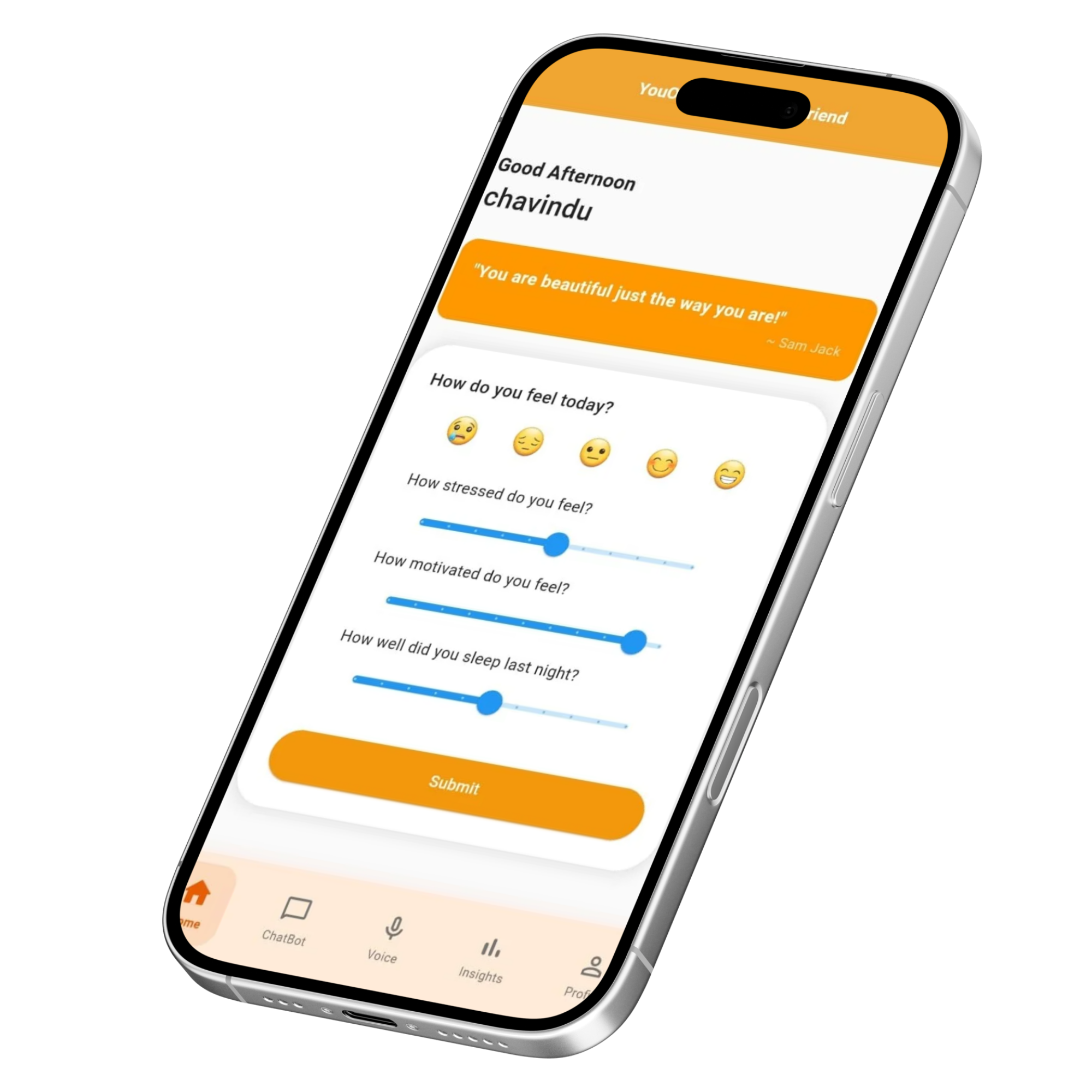

Our proposed solution combines a mobile application and a web browser extension that use machine learning models to collect, analyze, and interpret diverse behavioral and physiological signals. The system is designed to detect emotional states and transitions with high predictive accuracy. It is also designed to deliver just-in-time interventions personalized to the user's current emotional state. The architecture protects user data through secure, privacy-preserving protocols. This integrated, scalable framework aims to bridge the gap between traditional mental health diagnostics and continuous, real-world emotional monitoring, offering a proactive and personalized approach to mental well-being.